Context-Aware AI Agent: Memory Management and State Tracking

Oct 28, 2024 by Sabber Ahamed

Context awareness has become a crucial feature that distinguishes sophisticated systems from basic chatbots. In this article, I will guide you through creating a context-aware AI agent using Large Language Models (LLMs), combining theoretical understanding with a practical pseudocode implementation.

Table of Contents

- Understanding Context Awareness

- Design an Agent System

- Conversation History Management

- Context-Aware Prompt Generation

- Implementation Guide

- Create Your Own AI Agent

- Conclusion

Understanding Context Awareness

Let me give you some context on what I mean by context awareness in AI agents. Context awareness mainly refers to the agent's ability to:

- Maintain conversation history

- Remember user preferences (e.g., likes, dislikes, and events)

- Understand temporal and situational events

- Track and update conversation state

- Handle context switches gracefully

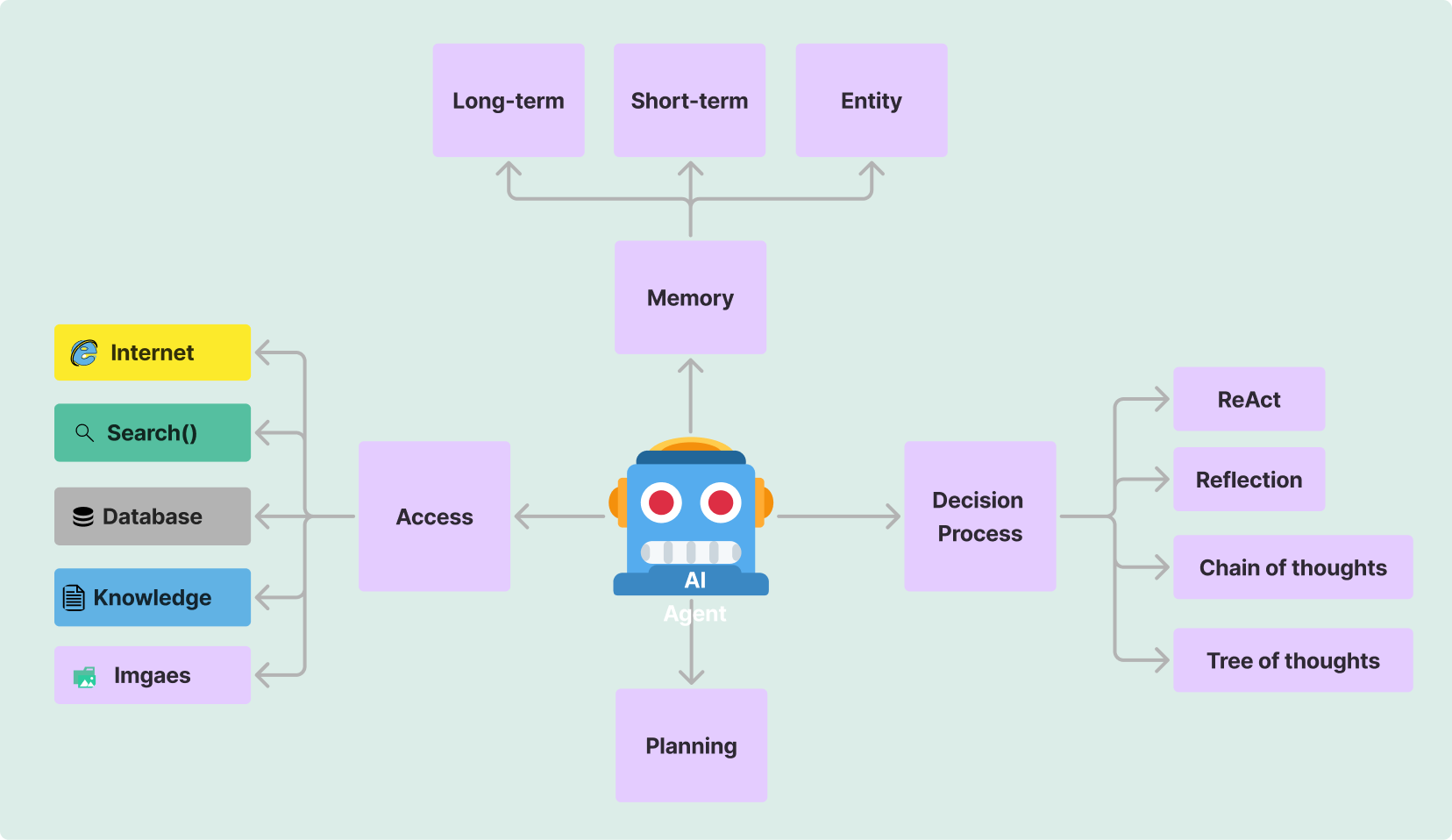

The following illustration shows the visual representation of how context flows in an AI agent:

The above diagram shows the flow of context in an AI agent system. The user input is processed by the NLU agent, which then interacts with the context manager, memory system, and state tracker. The core agent processes the input based on the context, and the output is generated accordingly.

Think of this process as a conversation between two people, where one person remembers the previous conversation and uses that information to respond appropriately.
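To make the state-tracking and context-switch ideas more concrete, here is a minimal sketch. The `StateTracker` class, its `update` method, the `detect_topic` helper, and the `TOPIC_KEYWORDS` map are all hypothetical names invented for illustration; a real agent would more likely use a classifier or an LLM to detect topics rather than keyword matching.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional

# Hypothetical keyword map, for illustration only. Substring matching is crude
# (for example, "train" contains "rain"), so treat this as a placeholder.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "weather": ["rain", "sunny", "forecast"],
    "travel": ["flight", "hotel", "trip"],
}


def detect_topic(text: str) -> Optional[str]:
    """Return the first topic whose keywords appear in the text, else None."""
    lowered = text.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return topic
    return None


@dataclass
class StateTracker:
    """Tracks the current topic and records each context switch."""
    current_topic: Optional[str] = None
    switches: List[Dict] = field(default_factory=list)

    def update(self, user_input: str) -> bool:
        """Update the topic from user input; return True on a context switch."""
        topic = detect_topic(user_input)
        if topic is None or topic == self.current_topic:
            return False  # No recognizable topic, or same topic as before
        switched = self.current_topic is not None
        if switched:
            self.switches.append({
                "from": self.current_topic,
                "to": topic,
                "timestamp": datetime.now(),
            })
        self.current_topic = topic
        return switched


tracker = StateTracker()
tracker.update("Will it rain tomorrow?")     # sets the first topic, not a switch
tracker.update("Book me a flight to Paris")  # weather -> travel, a switch
print(tracker.current_topic, len(tracker.switches))
```

Recording each switch with a timestamp gives the agent a simple way to decide later whether older history still belongs to the current topic.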

Design an Agent System

Let's build a context-aware system using modern LLM technologies. Here's our basic architecture:

```python
from datetime import datetime
from typing import Dict, List, Optional

import openai


class ContextManager:
    """Holds the conversation history, user preferences, and current context."""

    def __init__(self):
        self.conversation_history: List[Dict] = []
        self.user_preferences: Dict = {}
        self.current_context: Dict = {
            'timestamp': None,
            'topic': None,
            'state': None
        }
```

Conversation History Management

Memory management is crucial for maintaining context. There are different types of memory systems that can be used:

- Short-term memory: holds information for a short period, such as the most recent turns of the current conversation.

- Long-term memory: holds information that should persist over a long period, such as user preferences.

- Episodic memory: holds information about specific events and experiences.

- Semantic memory: holds general knowledge, facts, and concepts.
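One way to picture these four memory types is as separate stores inside a single object. The sketch below is only an assumption about how they might be organized: `AgentMemory` and its method names are hypothetical, and in practice the long-term and semantic stores would probably be backed by a database or vector store rather than in-memory dictionaries.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Deque, Dict, List


@dataclass
class AgentMemory:
    """Illustrative container for the four memory types (hypothetical design)."""
    # Short-term: bounded buffer of recent turns; the oldest turns are dropped
    short_term: Deque[str] = field(default_factory=lambda: deque(maxlen=5))
    # Long-term: facts that should persist, e.g. user preferences
    long_term: Dict[str, Any] = field(default_factory=dict)
    # Episodic: timestamped records of specific events
    episodic: List[Dict[str, Any]] = field(default_factory=list)
    # Semantic: general knowledge keyed by concept
    semantic: Dict[str, str] = field(default_factory=dict)

    def remember_turn(self, text: str) -> None:
        """Add a conversation turn to short-term memory."""
        self.short_term.append(text)

    def remember_event(self, description: str) -> None:
        """Record an event with the time it was stored."""
        self.episodic.append({"timestamp": datetime.now(), "event": description})


memory = AgentMemory()
for i in range(7):
    memory.remember_turn(f"turn {i}")
memory.long_term["favorite_language"] = "Python"
memory.remember_event("User booked a demo")
memory.semantic["LLM"] = "Large Language Model"

print(list(memory.short_term))  # only the 5 most recent turns remain
```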

Here's a simple implementation of a conversation manager:

```python
from datetime import datetime
from typing import Dict, List


class ConversationManager:
    def __init__(self, max_history: int = 10):
        self.max_history = max_history
        self.history = []

    def add_interaction(self, user_input: str, agent_response: str):
        interaction = {
            'timestamp': datetime.now(),
            'user_input': user_input,
            'agent_response': agent_response
        }
        self.history.append(interaction)

        # Maintain history size by keeping only the most recent entries
        if len(self.history) > self.max_history:
            self.history = self.history[-self.max_history:]

    def get_context_window(self, window_size: int = 3) -> List[Dict]:
        """Return recent conversations for context"""
        return self.history[-window_size:]
```

Context-Aware Prompt Generation

To generate context-aware prompts for the LLM, we need to combine the conversation history, user preferences, and current user input. Here's a simple function to generate a contextual prompt:

```python
from typing import Dict, List


def generate_contextual_prompt(
    user_input: str,
    conversation_history: List[Dict],
    user_preferences: Dict
) -> str:
    # Format conversation history as alternating User/Agent lines
    history_text = '\n'.join([
        f"User: {interaction['user_input']}\n"
        f"Agent: {interaction['agent_response']}"
        for interaction in conversation_history
    ])

    # Create system prompt with context
    prompt = f"""
Previous conversation:
{history_text}

User preferences:
{user_preferences}

Current user input:
{user_input}

Provide a contextually relevant response that takes into account
the conversation history and user preferences.
"""
    return prompt
```

Implementation Guide

Now let's combine all the components described above. The following pseudocode outlines the implementation of a context-aware AI agent using LLMs:

```python
# Assumes the openai Python SDK, version 1.0 or later
from openai import AsyncOpenAI


class ContextAwareAgent:
    def __init__(self, api_key: str):
        self.conversation_manager = ConversationManager()
        self.context_manager = ContextManager()
        # The async client lets the chat completion call be awaited
        self.client = AsyncOpenAI(api_key=api_key)

    async def process_input(self, user_input: str) -> str:
        # Get relevant context
        recent_history = self.conversation_manager.get_context_window()
        user_prefs = self.context_manager.user_preferences

        # Generate contextual prompt
        prompt = generate_contextual_prompt(
            user_input,
            recent_history,
            user_prefs
        )

        # Get response from LLM
        response = await self.get_llm_response(prompt)

        # Update conversation history
        self.conversation_manager.add_interaction(user_input, response)
        return response

    async def get_llm_response(self, prompt: str) -> str:
        try:
            response = await self.client.chat.completions.create(
                model="gpt-4",
                messages=[
                    {"role": "system", "content": "You are a helpful agent."},
                    {"role": "user", "content": prompt}
                ],
                temperature=0.7,
                max_tokens=150
            )
            return response.choices[0].message.content
        except Exception as e:
            # Return the error as text so the caller always receives a string
            return f"Error: {str(e)}"
```

Create Your Own AI Agent

We created getassisted.ai for building a seamless multi-agent system without writing any code. The goal is to create an assistant that helps you learn any niche topic. Whether you're a researcher, developer, or student, our platform offers a powerful environment for exploring the possibilities of multi-agent systems. You can also explore some of the assistants created by our users.

Conclusion

Creating a context-aware AI agent requires careful consideration of various components:

- Robust context management

- Efficient memory systems

- Dynamic context weighting

- Error handling and recovery

- Performance optimization
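Dynamic context weighting appears in the list above but is not implemented in this article. As one possible interpretation, the sketch below scores each past interaction by recency and by word overlap with the current input, then keeps the highest-scoring ones. The function names, the 30-minute half-life, and the scoring formula are all assumptions chosen for illustration; the interaction dictionaries use the same keys as `ConversationManager`.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def score_interaction(interaction: Dict, query: str, now: datetime,
                      half_life_minutes: float = 30.0) -> float:
    """Combine recency decay with word overlap (an assumed scoring scheme)."""
    age_minutes = (now - interaction["timestamp"]).total_seconds() / 60.0
    recency = 0.5 ** (age_minutes / half_life_minutes)  # halves every half-life
    query_words = set(query.lower().split())
    past_words = set(interaction["user_input"].lower().split())
    overlap = len(query_words & past_words) / max(len(query_words), 1)
    return recency + overlap


def select_context(history: List[Dict], query: str, top_k: int = 2) -> List[Dict]:
    """Return the top_k highest-scoring interactions in chronological order."""
    now = datetime.now()
    ranked = sorted(history,
                    key=lambda item: score_interaction(item, query, now),
                    reverse=True)
    return sorted(ranked[:top_k], key=lambda item: item["timestamp"])


now = datetime.now()
history = [
    {"timestamp": now - timedelta(hours=2),
     "user_input": "recommend a python book", "agent_response": "..."},
    {"timestamp": now - timedelta(minutes=90),
     "user_input": "what is the weather", "agent_response": "..."},
    {"timestamp": now - timedelta(minutes=1),
     "user_input": "thanks", "agent_response": "..."},
]

selected = select_context(history, "another python book please")
print([item["user_input"] for item in selected])
```

Here the old but topically related interaction is kept alongside the most recent one, while the unrelated weather question is dropped.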

By following the implementation guidelines and best practices outlined in this article, you can create a sophisticated AI agent that maintains context effectively and provides more natural, coherent interactions.